Alysa Buchanan, Ellis Anderson, and I designed this N.I.C.O. prototype for IBM, drawing on research and user testing. We were tasked with creating a system or device that assists blind or visually impaired users with a daily task.

Research

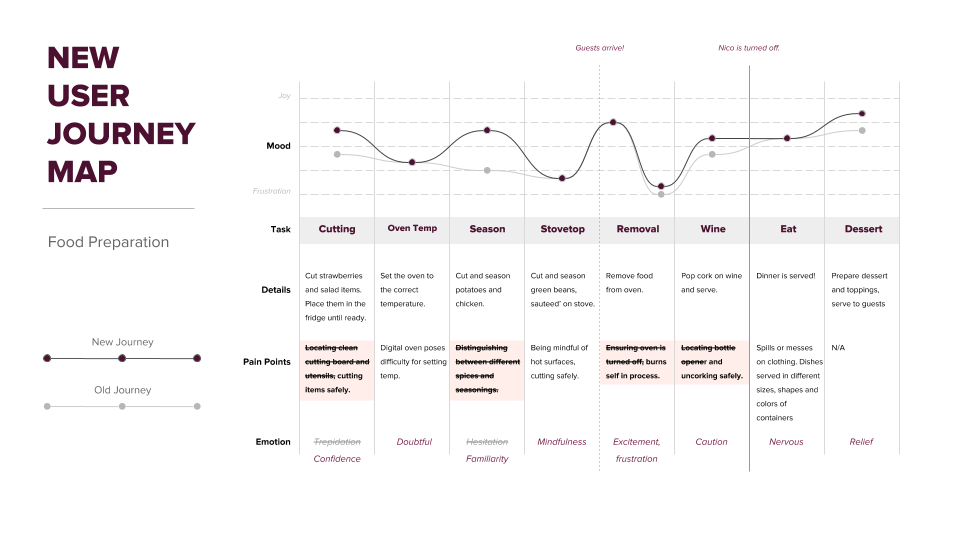

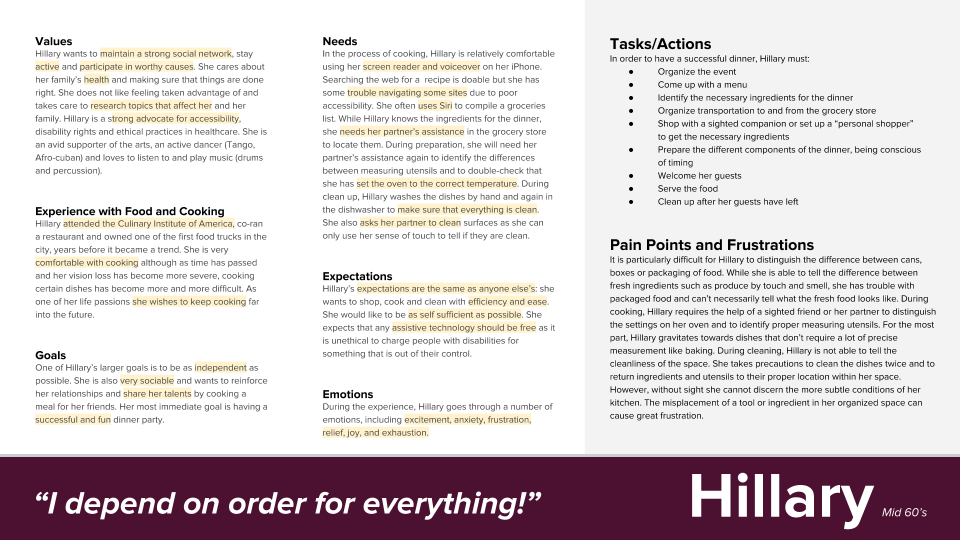

To be as effective as possible, our research covered several facets. First, we followed our user, Hillary, through her daily activities, using journey maps to identify pain points. We then identified her visual impairment (Stargardt's) and researched it to better understand its implications.

Prototyping

Building on our research, we considered potential hardware as we designed N.I.C.O. The system is a device worn at home for situational use, similar to reading glasses. It is paired with a smartphone and/or a smart speaker such as an Alexa device. Potential forms include prescription or fashion eyeglasses (the form we used for the prototype) or a frame attachment for existing glasses.

After the form was decided, we considered and designed for the technology it would carry, including an infrared camera that feeds data to the machine learning system so it can build its database and assist with tasks. The earpieces would use bone conduction to deliver discreet assistive audio directions.

It was very important that the companion application, which pairs with N.I.C.O. and offloads processing, remain simple and easy to use. Color was a key consideration: we chose colors that are easy to read and provide enough contrast for most visual impairments. The app also needed a voice-based interface with calm, detailed instructions for further accessibility and hands-free use.

Above are the five key animation screens that the user sees in the phone app.
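The contrast requirement for the app's colors can be checked numerically. Below is a minimal sketch, assuming the WCAG 2.x contrast-ratio formula as the measure; the project itself does not state which standard or palette was used, so the function names, the 4.5:1 threshold, and any colors passed in are illustrative assumptions rather than the team's actual process.

```python
# Sketch of a WCAG 2.x contrast check (an assumed measure, not one named by the project).

def _linearize(channel_8bit: int) -> float:
    """Convert an 8-bit sRGB channel to linear light, per the WCAG 2.x definition."""
    c = channel_8bit / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    """Relative luminance of an (R, G, B) color with channels in 0..255."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple[int, int, int], bg: tuple[int, int, int]) -> float:
    """Contrast ratio between two colors, from 1.0 (identical) up to about 21.0."""
    l1, l2 = relative_luminance(fg), relative_luminance(bg)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def meets_threshold(fg, bg, minimum: float = 4.5) -> bool:
    """True if the pair reaches the given ratio (4.5 is the WCAG AA value for body text)."""
    return contrast_ratio(fg, bg) >= minimum


if __name__ == "__main__":
    # Hypothetical color pairs, chosen only to illustrate the check.
    print(round(contrast_ratio((0, 0, 0), (255, 255, 255)), 2))
    print(meets_threshold((200, 200, 200), (255, 255, 255)))
```

Black on white gives a ratio of roughly 21:1, the maximum, while light gray on white falls well short of 4.5:1. A stricter minimum such as 7.0 (the WCAG AAA value for body text) may suit an app designed specifically for low-vision users.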

Product Video

Above is a short video of the product experience, which we presented to the IBM design adviser team.